PostgreSQL: Battle of the UUIDs

One of the more exciting RFCs currently in the proposal stage is an extension to RFC 4122, the UUID standard, to add a time-based UUID variant known as a Version 7 UUID. This new version of the UUID data type is of specific interest to database engineers, as it would allow for a sortable, universally unique identifier to be available, backed by an industry standard.

At face value, an update to a data type sounds like an uninteresting change; why should I care that I can sort UUIDs?

Surely, this is

purely an optimisation that allows the removal of a created_at column from a table. However, you are not the target

audience for this optimisation. The target audience is the database itself (and the nerds who care about them). To understand why the database cares about such improvements, we must first understand how it works.

How a relational database works

A relational database allows a user to store data in a queryable way, where data is divided by entities known as tables.

Each table defines a schema, a collection of columns of a specific type. For example, a table of students may have a

name column of type text, a date_of_birth column of type date, and a score column of type integer. An

individual data point is called a row (or tuple) and represents a collection of values, ordered by each column.

SQL is a language that allows an end-user to query this data and has been the de facto standard for querying data since 1974 - 50 years at the time of writing. Given the prior example of a table of students, a user may want to list all rows stored in the table, which they could achieve with the following query:

SELECT name, date_of_birth, score

FROM students;

But perhaps, more interestingly, a user may want to find all students born after 1990 with the last name of "Jones".

SELECT name, date_of_birth, score

FROM students

WHERE date_of_birth > '1990-01-01'

AND name = 'Jones';

This is remarkably powerful and is no wonder why SQL is at the center of most of the world's data-driven applications. Some simplistic software can even just be thought of as a user interface to a database, with access control and display logic layered on top.

However, how does a database know how to find the data? Indexes. Similar to the index of an old Encyclopedia, a

database index is a data structure that allows a database to cheat and locate data much faster than searching each

row. Indexes are defined for a specific column; for example, if a database has an index on the name column,

the database server will maintain a secondary data structure for indexing on the name column.

Without indexes, if an end user wanted to list all students with the last name of "Jones", the database would have to perform a full table scan to assess each data point in the table and see if it matches the user's criteria. This can be a quick process for small tables, but the time taken to perform a full table scan grows linearly as the table grows. This is why database optimisation is essential, as some problems will only be discovered once the table grows to a specific size.

Alternatively, suppose the database has an index on the name column before executing the query. In this case, the database engine would first use the index to

find all rows with the last name of "Jones" before falling back to a full table scan to find all rows with a date of

birth after 1990. In this example, a full table scan is still performed, but it is only performed on a subset of the table

and, therefore, is much faster.

Internally, the most basic index could be a sorted list of all values in the target column, mapped to the row ID of the row that contains the value. A database engine could then apply a search algorithm, such as binary search, to find the row ID(s) that match the index.

Using the students query as an example, the database engine would use the index to find all rows with the last name of

"Jones" using the index below. The algorithm would make two comparisons ("Smith" followed by "Jones") to return a subset

of the table that contains all rows with the last name of "Jones" before performing a full table scan to find all rows

with a date of birth after 1990. In reality, the database engine would use a more complex algorithm, such as a B-tree

(the default in Postgres), but the concept is the same.

Andrew, 1

Bob, 2

Smith, 3

Jones, 4

Xavier, 5

If indexes are so great, why can't we just index every column? Unfortunately, great power comes with great

responsibility cost. As indexes are a secondary data structure, they take up additional disk space for each defined

index. This is wasteful if the index is not used, especially as each index needs to be updated when a row is inserted,

updated or deleted - leading to more storage costs and slower write performance. Shudders

But what does this have to do with UUIDs?

Each table in a database usually has a "primary key", which is a column that uniquely identifies each row in the table -

which is indexed. This allows a user to quickly reference a specific row in a table, for example, for updates & deletes.

In the students table example, let's assume that the name column is the primary key. At the end of the first

semester, we can insert the student's test scores into the database. If we converted our table to a CSV file, it would

look like this:

name,date_of_birth,score

Andrew,1990-01-01,30

Bob,1991-01-01,53

Smith,1992-01-01,68

Jones,1993-01-01,78

Xavier,1994-01-01,59

A new student joins the class in the second semester - and we have a problem. Their name is "Andrew", and we already have a student with that name. In theory, we could insert a row with the same name. However, it would wreak havoc years later when we try to aggregate the specific students' scores, as we would have to remember which "Andrew" is which using the date of birth column.

Instead, we could insert a new column to the table to use as the primary key - student_id. This would allow us

to uniquely identify each student and simplify our lives in the future. However, we now have a new challenge: What

type of data should we store in the student_id column?

Auto-incrementing integers

The most common solution is to keep a number that increments for each row inserted into the table. Our

first student would have a student_id of 1, and our second would have a student_id of 2. This is a

battle-tested solution and is used by many databases by default. In PostgreSQL, this is known as a SERIAL column.

CREATE TABLE students

(

student_id SERIAL PRIMARY KEY,

name TEXT NOT NULL,

date_of_birth DATE NOT NULL,

score INTEGER NOT NULL

);

PostgreSQL will automatically generate and output this number when we insert a new row into the table.

This is usually a good solution, as it is simple and efficient to understand. However, it does have two key drawbacks:

The database must be consulted to generate the primary key. The end user cannot know the following available number and must rely on the database to generate it for them. This introduces a potential bottleneck, especially if we have many users inserting rows into the table simultaneously, as the database must pause between each insert to generate the next number to ensure uniqueness.

This also ties our application to that specific database and the specific database server in charge of generating the following number. For large-scale systems (such as a social network), this is a problem as a user in Europe must use the database server hosted in Australia to ensure the generated number is unique. Network latency is a genuine concern when optimising for a global audience - a network round trip between London and Sydney is 250ms, over the 100ms threshold considered "instant" to an average user.

Additionally, by exposing the ID to the end user, we are exposing the ID of their data and each previous ID. Imagine an

e-commerce system; as a competitor, I could place a small order and see my order ID as #100. I can make another small

order and generate the order ID #150 a week later. I can then infer that the company has made 50 orders in

the past week. Additionally, I could see if the user interface tries to stop me from looking at other order IDs that aren't mine but are valid.

UUIDs

An improvement over database-generated IDs is to use a UUID, a universally unique identifier typically

generated

at the application layer. A UUID is a 128-bit number with several different versions that define how the number is

generated. A human-readable representation of a UUID is a 36-character string, with four dashes to separate the other

parts of a UUID, for example: 9d42718a-c67d-478a-91ab-b5cc9182e122.

The most common version of a UUID is the Version 4 variant, which is composed of entirely pseudo-random data. This is the default UUID in most libraries and alleviates most of the concerns with auto-incrementing integers.

As UUIDs are generated at the application layer with a high degree of randomness, the end user of the database does not need to check in with a central authority to see if the ID is unique. In a global system, this means an end user in London can connect to a database server closer to them and know that a user in Sydney will not generate the same ID.

I am using absolute terms here; however, there is a minuscule chance that two UUIDs generated by different users will be the same. However, that chance is 1 in 2.71 x 10^18. Therefore, I've chosen not to care about that chance, by words better put by Jonathan Hall.

Additionally, as Version 4 UUIDs are composed of entirely pseudo-random data, it is not possible to infer the number of generations between two UUIDs.

The problem with UUIDs

As the most common version of a UUID is entirely pseudo-random, by helping our application engineers ease their scaling concerns, we are introducing a new problem for our database engineers and our database engine. Random UUIDs result in random access patterns, and therefore weaken our indexes compared with auto-incrementing integers.

Imagine our simplistic index from earlier, using binary search and a sorted list of values:

029e6a44-0478-4240-9611-71efccadfc81

089bacf1-12b9-4b48-b2f9-9567292f4473

71e54e78-d9de-4999-9d46-48fd689ab013

a5963192-15df-41a2-9167-c36c0e37b7da

e88ba56c-0745-419e-8690-9b124790c78d

Using the above example, if we wanted to find the row with the UUID a5963192-15df-41a2-9167-c36c0e37b7da, we could use

binary search to find the ID in 2 comparisons. This is a very fast operation when the entire index is in memory.

However, if our index grew to 1 million rows, we couldn't store the entire index in memory. Instead, the database engine would have to read the index in chunks from disk and perform a binary search on each chunk. By using, UUIDs, the index is evenly distributed across the entire index. Therefore, the database engine would have to consistently read data from the disk to perform the search.

Most commonly, recent data stored in the database is accessed more frequently, which allows auto-incrementing integers to be much more efficient. As data in the index is skewed towards the end of the index, and in typical cases, the database engine would have to read less frequently from the disk. This is because the database can cache the last read chunks from the disk in memory, and check if the requested ID is in memory first before checking the disk.

A read from memory is typically around 250us while a read from an SSD is around 1000us - which is four times slower,

according to

Jeff Dean's talk

in 2012.

UUID Version 7: A new hope

A proposed extension to the UUID standard is an introduction of a time-based UUID, known as UUID Version 7. In contrast to UUID Version 4, UUID Version 7 reserves the first 48 bits of the ID for a timestamp. This reduces the entropy of the UUID; however, it results in a UUID that is sortable by generation time. For example, the two UUIDs below were generated one after the other:

- 018d5298-759c-7b78-801e-2f5176778d4f

- 018d5299-07f4-7730-a4be-be1bc4a35ee6

As the first 48 bits of the UUID are reserved for a timestamp, we can see that the first seven characters of the UUID are the same, as they were generated within 10 minutes of each other.

While this addition to the UUID standard is still in draft, it generates a lot of excitement in software engineering

circles. It has already been adopted in some software. For example, a popular Golang UUID library, google/uuid has

added native support for UUID Version 7, but it is not yet the default.

Version 7 UUIDs can keep our indexes happy while allowing our application layer to scale horizontally without a central authority. This is because our indexes can sort the UUIDs and skew the most recent data towards the end of the index, allowing the database engine to read less frequently from the disk - similar to auto-incrementing integers.

Our cybersecurity engineers are also relatively happy, as we are not exposing the number of generations between two IDs. However, depending on the nature of the data, we may still be exposing too much information. For example, in an application where users get support about sensitive topics, we may want to keep the date their user account was created secret from other users.

Benchmarking the difference

However, how much of a difference does this actually make? UUID Version 4 has existed since the early 2000s, so should engineers rush to change their existing UUIDs to UUID Version 7?

To test this for myself, I used pgbench to benchmark tables' select performance with a primary key of UUID Version

4 compared with a UUID Version 7 across varying row sizes.

Methodology

I created the database server using Azure Database for PostgreSQL - Flexible Server, with the following configuration:

- Standard_D4ads_v5

- Premium SSD P10

- PostgreSQL 16.0

This configuration was chosen as a basic production-ready configuration. I could then create six tables of the same schema:

create table public.uuid_v7_100

(

id uuid not null

constraint uuid_v7_100_pk

primary key,

score integer not null,

first_name text not null,

last_name text not null

);

-

uuid_v4_100: UUID Version 4, 100 rows -

uuid_v4_100000: UUID Version 4, 100,000 rows -

uuid_v4_1000000: UUID Version 4, 1,000,000 rows -

uuid_v7_100: UUID Version 7, 100 rows -

uuid_v7_100000: UUID Version 7, 100,000 rows -

uuid_v7_1000000: UUID Version 7, 1,000,000 rows

And use a small Python script to fill each table with random data using the corresponding UUID version.

With the baseline data in place, I used pgbench to run a benchmark on each table, using a simple script that selected

five random rows. I ran the benchmark three times for each table and took the average of the results.

> pgbench -n -f 4_100.sql -h myserver.postgres.database.azure.com -p 5432 -U adminuser uuid_test -c 10 -j 2 -T 60

PGBench is a built-in benchmarking tool for PostgreSQL that called my script ten times concurrently for 60 seconds against the database server and nicely outputted the results.

Results

Size

Before dividing into the query performance, I wanted to see how much space each table took up on disk. As indexes are a secondary data structure, they take up additional space on disk - and the data type of the index will affect this.

| Table | Size | Size Reduction |

|---|---|---|

| uuid_v4_100 | 32 kB | 0% |

| uuid_v7_100 | 32 kB | 0% |

| uuid_v4_100000 | 11 MB | 100% |

| uuid_v7_100000 | 9656 kB | 87.78% |

| uuid_v4_1000000 | 101 MB | 100% |

| uuid_v7_1000000 | 94 MB | 93.07% |

The following SQL query was used to calculate the size of the table:

SELECT pg_size_pretty(pg_total_relation_size('uuid_v4_100')) as uuid_v4_100,

pg_size_pretty(pg_total_relation_size('uuid_v7_100')) as uuid_v7_100,

pg_size_pretty(pg_total_relation_size('uuid_v4_100000')) as uuid_v4_100000,

pg_size_pretty(pg_total_relation_size('uuid_v7_100000')) as uuid_v7_100000,

pg_size_pretty(pg_total_relation_size('uuid_v4_1000000')) as uuid_v4_1000000,

pg_size_pretty(pg_total_relation_size('uuid_v7_1000000')) as uuid_v7_1000000;

The UUID Version 7 tables are slightly smaller on larger sizes but the same on smaller sizes. This is unsurprising, as with ultra-small data sets, the data is not larger than a single block on disk - which in Postgres is 32kb.

Select Query Performance

The following table shows the average latency in milliseconds for each table, taken from pgbench:

| Table | Average Latency (ms): Run 1 | Run 2 | Run 3 | Average |

|---|---|---|---|---|

| uuid_v4_100 | 99.422 | 99.633 | 99.487 | 99.514 |

| uuid_v7_100 | 99.126 | 99.616 | 98.823 | 99.183 |

| uuid_v4_100000 | 97.891 | 98.700 | 97.026 | 97.872 |

| uuid_v7_100000 | 96.329 | 97.537 | 99.365 | 97.744 |

| uuid_v4_1000000 | 98.749 | 99.480 | 99.675 | 99.301 |

| uuid_v7_1000000 | 97.180 | 97.686 | 99.439 | 98.102 |

Total results:

| Row count | Version 4 Average Latency (ms) | Version 7 Average Latency (ms) | Difference |

|---|---|---|---|

| 100 | 99.514 | 99.183 | 99.66% |

| 100,000 | 97.872 | 97.744 | 99.86% |

| 1,000,000 | 99.301 | 98.102 | 98.79% |

These results show a slight performance improvement when using Version 7 UUIDs; however, from this benchmark, it is a less than 1.5% improvement. This may be due to the small size of the data, the artificial data used (all UUIDs were generated at a very similar time); however, many production databases are below 1,000,000 rows.

A micro-optimisation

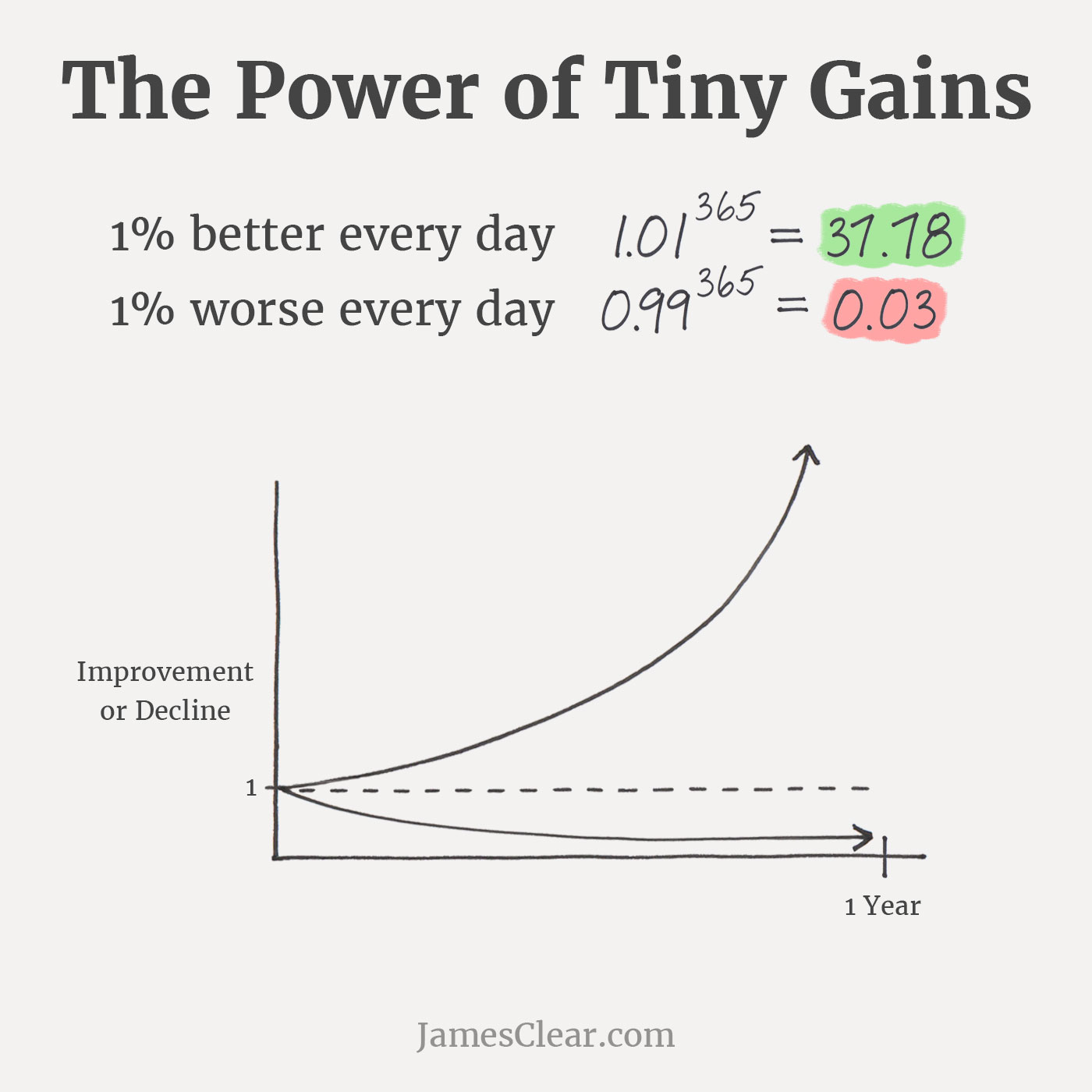

Version 7 UUIDs are often touted as a game-changer for database engineers or a looming messiah that will speed up databases across the globe. However, the difference is not earth-shattering for small to medium-sized data sets. But that doesn't mean we should ignore it.

Much has been written about the power of tiny improvements and how continuous micro improvements can, over time, lead to a substantial improvement. The cost of switching to UUID Version 7, as long as the trade-offs are understood, is an engineer's favourite type of work, an "easy win".